Science isn’t a subject; it is a process, a methodology for inquiry and for developing knowledge and understanding. But what counts as a science? What criteria, what checklist, tells us whether a subject can be counted as a science with testable theories?

Essentially, replicability: the ability to repeatedly yield the same precise results using a highly controlled methodology, so that cause and effect can be inferred from testable predictions. A theory should also be parsimonious, offering a single unified explanation (Psychology has a multitude of contradictory paradigms; consider the social and physiological approaches). Theories should be falsifiable: whilst there may be evidence for them, they have just not been disproved... yet. This is classically illustrated by a quote commonly attributed to David Hume: “No amount of observations of white swans can allow the inference that all swans are white, but the observation of a single black swan is sufficient to refute that conclusion.” This is often referred to as the problem of induction.

Psychology has a vested interest in this argument, as it is often viewed by the ‘pure sciences’ as a wishy-washy, ‘let’s just sit around and talk about our feelings’ kind of subject. However, many of those in psychological research would take the view that it has an equal right to be considered a science. The paradox is that, in having to ‘prove’ its worth, Psychology suffers from an inferiority complex on a collective level. An interesting article in the Guardian explores some of the finer points further here.

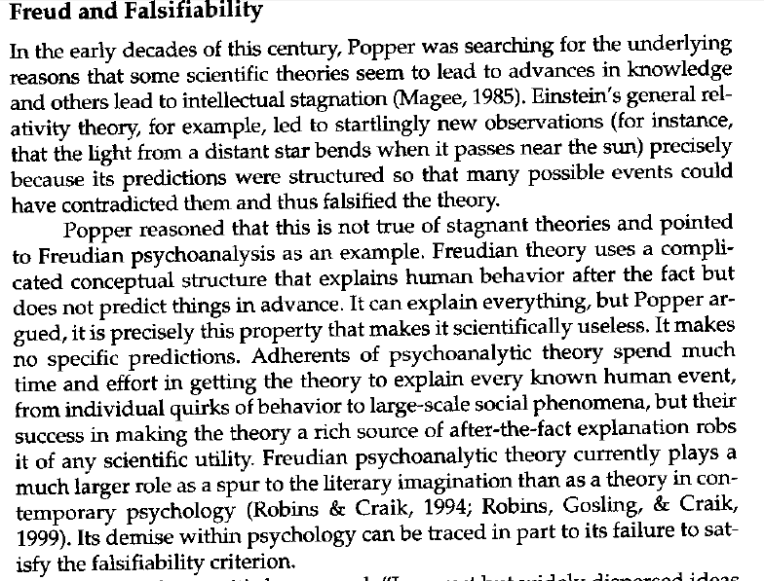

The in-joke that summarises this view is that Psychology has been suffering from ‘Physics envy’, a play on words on a Freudian concept that is used to exemplify the position of Psychology. Freud and the Psychodynamic approach have been the material used most effectively to reinforce this point. The philosopher Karl Popper is credited with being the most vocal on this view, particularly regarding Freud. The scientific process is now based on the hypothetico-deductive model (Popper, 1935). Popper suggested that theories/laws about the world should come first, and that these should be used to generate expectations/hypotheses which can be falsified by observation and experiment.

Have a watch of this insightful video, which discusses the Psychodynamic approach and other methods from the history of psychology that are examples of a non-scientific approach. The answer to the question posed at the beginning of the post? History will answer yes. Psychologists researching in universities tend to work from a multidisciplinary perspective, collaborating on research and taking a more applied stance rather than working in isolation from a specific approach. Here is an example of what modern psychological research looks like... a long way from the Freudian model often wrongly considered to be the remaining foundation of the subject.